Update on AI-Driven Electricity Demand in the U.S. and China

Goldman's latest report shows that AI progress is not just about LLMs and Data

AI Proem’s first few articles on AI infrastructure were published nearly a year ago. We covered everything from the surge in energy consumption driven by the AI boom to why renewable energy wouldn't be able to replace traditional energy sources during this surge in demand, the energy bottleneck in the U.S., and Canada’s potential opportunity.

Well, today, I want to loop back to the relationship between AI, Data Centers, and Energy and provide an update on that front.

See some relevant readings here:

AI Arms Race Far From Over: Chips is Only Half the Game, Infrastructure is the Other

Trump 2.0: Infrastructure Investment, Traditional Energy Resurgence, and Foreign Capital

The Jevons Paradox in AI Infrastructure: DeepSeek Efficiency Breakthroughs to Drive Energy Demand

Johor, Malaysia: AI Data Centers Drive Investment, But Infrastructure Challenges Persist

Canada Should Prioritize Growth and Position Itself as an AI Infrastructure Leader

Power Demand Continues to Increase

As we know, a big part of AI development right now is dependent on electricity. The race to build ever-larger data centers is reshaping national power grids, driving record energy demand in both the U.S. and China.

Reuters reported today that U.S. power consumption will hit record highs in 2025 and 2026, according to the Energy Information Administration's (EIA) short-term energy outlook. Meanwhile, the Trump administration has been greenlighting power plants and encouraging energy capacity buildup at a faster rate than the country has seen in decades.

The EIA projected power demand will rise to 4,187 TWh in 2025 and 4,305 TWh in 2026, up from a record 4,097 TWh in 2024. This surge is largely driven by cryptocurrency and AI demand.
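To put those EIA figures in perspective, here is a quick back-of-the-envelope calculation of the implied year-over-year growth. It uses only the three TWh numbers quoted above; the function name `yoy_growth` is just an illustrative helper, not something from the EIA.

```python
# EIA short-term outlook figures quoted above, in terawatt-hours (TWh).
demand_twh = {2024: 4097, 2025: 4187, 2026: 4305}


def yoy_growth(series):
    """Return {year: percent change versus the previous year in the series}."""
    years = sorted(series)
    return {
        year: (series[year] / series[prev] - 1) * 100
        for prev, year in zip(years, years[1:])
    }


growth = yoy_growth(demand_twh)
for year, pct in growth.items():
    print(f"{year}: {pct:.1f}% year over year")
```

That works out to roughly 2.2% growth in 2025 and 2.8% in 2026, which is modest in percentage terms but large in absolute terms for a grid of this size.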

As written before, China has been far ahead in the energy race, with decades of strategic planning behind its build-out of renewable energy plants, from hydro to solar to wind farms, across the nation. But now American corporations are catching on, trying both to capitalize on the AI boom and to provide the power needed to supercharge data centers filled with GPUs.

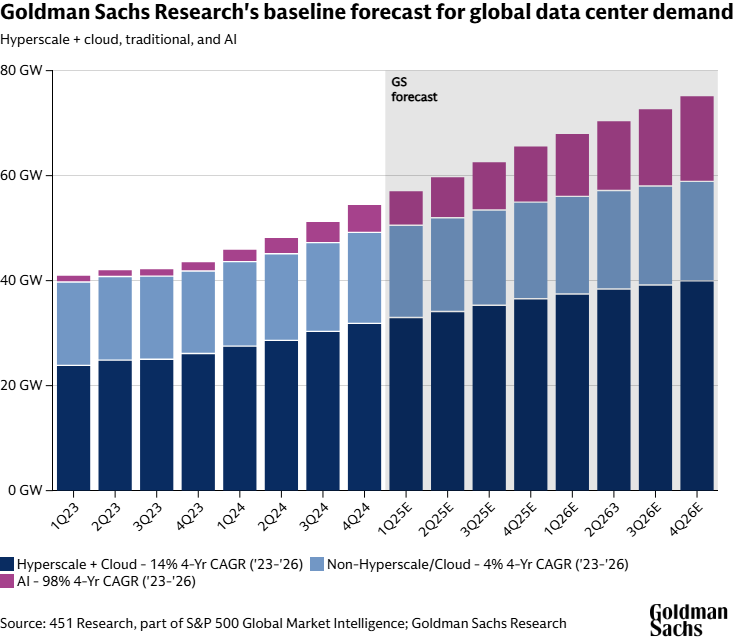

Goldman Sachs Research forecasts that data centers will increase their share of global power demand from 1–2% in 2023 to 3–4% by 2030. In the U.S., that share could more than double, from 4% in 2023 to ~9% by 2030.

“If global data center growth in 2030 vs. 2023 levels were its own country, it would be a top 10 global power consumer,” according to Goldman Sachs Research analysts Jim Schneider, Carly Davenport, and Brian Singer.

Furthermore, according to Goldman Sachs research, current data center power demand in the U.S. is 62 gigawatts (GW), split across cloud workloads (~58%), traditional workloads (~31%), and AI (~13%). AI is projected to grow to 28% of the total by 2027, while cloud drops to 50% and traditional workloads fall to 21%.
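As a rough illustration, the split above can be converted into gigawatts per workload type. This is a sketch using only the 62 GW total and the approximate shares quoted above; because those shares are rounded ("~"), they do not sum to exactly 100%. No 2027 total is given in the text, so only the change in share is computed for that year.

```python
# Figures quoted above from Goldman Sachs research (shares are approximate).
total_gw_now = 62
share_now_pct = {"cloud": 58, "traditional": 31, "ai": 13}
share_2027_pct = {"cloud": 50, "traditional": 21, "ai": 28}

# Convert today's percentage shares into gigawatts.
gw_now = {name: total_gw_now * pct / 100 for name, pct in share_now_pct.items()}

# Change in share between now and 2027, in percentage points.
shift_pp = {name: share_2027_pct[name] - share_now_pct[name] for name in share_now_pct}

for name in share_now_pct:
    print(f"{name:12s} {gw_now[name]:5.1f} GW now, {shift_pp[name]:+d} pp by 2027")

# The quoted shares are rounded, so the totals are close to, not exactly, 100.
print("sum of shares now:", sum(share_now_pct.values()))
print("sum of shares 2027:", sum(share_2027_pct.values()))
```

On these numbers, AI accounts for about 8 GW today, and its share of the total rises by about 15 percentage points by 2027.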

Meanwhile, the IEA projects global data-center electricity use to reach ~945 TWh by 2030, doubling from 2024, with AI-optimized facilities as the dominant driver. That’s nearly 3% of global electricity in its base case. The geographic breakdown of electricity used by data centers in 2024 shows the U.S. accounting for 45% of usage, China 25%, and Europe 15%.
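A quick sketch of what those IEA numbers imply, under two stated assumptions: "doubling from 2024" is read as exactly 2x, and "nearly 3%" is rounded to 3%. The results are therefore ballpark figures derived from the text, not numbers taken from the IEA itself.

```python
# IEA base-case figures quoted above.
dc_2030_twh = 945             # projected global data-center electricity use in 2030
share_of_global_2030 = 0.03   # assumption: "nearly 3%" rounded to 3%
regional_share_2024 = {"U.S.": 0.45, "China": 0.25, "Europe": 0.15}

# Assumption: "doubling from 2024" is treated as exactly 2x.
dc_2024_twh = dc_2030_twh / 2

# Global electricity use in 2030 implied by the ~3% share.
implied_global_2030_twh = dc_2030_twh / share_of_global_2030

# Apply the 2024 regional shares to the implied 2024 total.
regional_2024_twh = {
    region: dc_2024_twh * share for region, share in regional_share_2024.items()
}
rest_of_world_share = 1 - sum(regional_share_2024.values())

print(f"Implied 2024 data-center use: {dc_2024_twh:.1f} TWh")
print(f"Implied 2030 global electricity use: {implied_global_2030_twh:,.0f} TWh")
for region, twh in regional_2024_twh.items():
    print(f"{region}: ~{twh:.0f} TWh in 2024")
print(f"Rest of world share: {rest_of_world_share:.0%}")
```

Under these assumptions, U.S. data centers alone used on the order of 210 TWh in 2024, with the three named regions together accounting for 85% of the global total.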

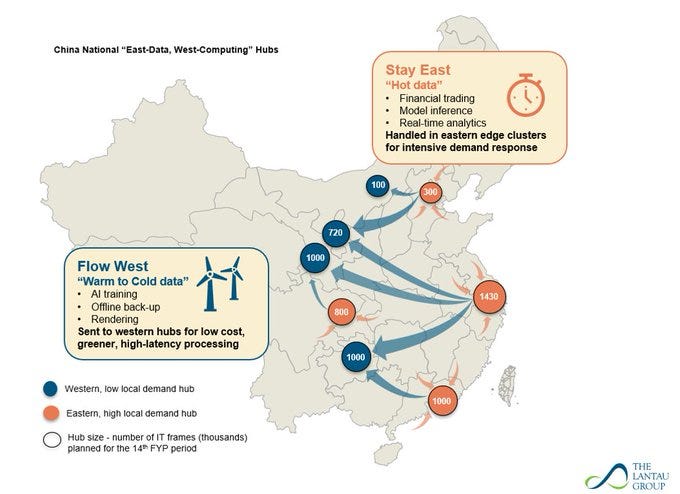

Remaining in the lead are the U.S. and China. In the U.S., demand is clustered in Northern Virginia and Arizona, driving up electricity bills and raising environmental concerns. In China, it’s concentrated around Beijing, Shanghai, and satellite economic zones, where the government has steered businesses over the last decade.

A China energy expert at the Lantau Group noted on X: “According to China's renewable consumption quota policy, all new data centers in these hub regions must buy at least 80% of their power from renewable sources.” He highlighted that this should be feasible in “blue hubs” rich in renewables, but more difficult in “red hubs.” [See graph below]

He added that outlooks vary by projection and province, but forecasts suggest 60–70% of China’s new compute will land in these hub regions, subject to renewables quotas. Data centers currently account for ~2.5% of national power demand, and that share could double by 2030.

Goldman Sachs estimates that 40% of incremental power demand from data centers will be met with renewables, and the rest will be met with natural gas. Still, the IEA cautions that coal, gas, and nuclear will remain in the mix and, more likely than not, renewables alone won’t be enough.

China, with its East Data, West Compute strategy, is notably optimizing its land resources, thus accessing lower electricity prices in the western regions to power the data demands on the eastern coast. While this all sounds like the optimal solution, the IEA says that realistically, the energy mix to power more AI data centers will also include coal, gas, and nuclear. See here why renewables cannot replace all energy sources.

New Kind of Data Center. More Capital Required

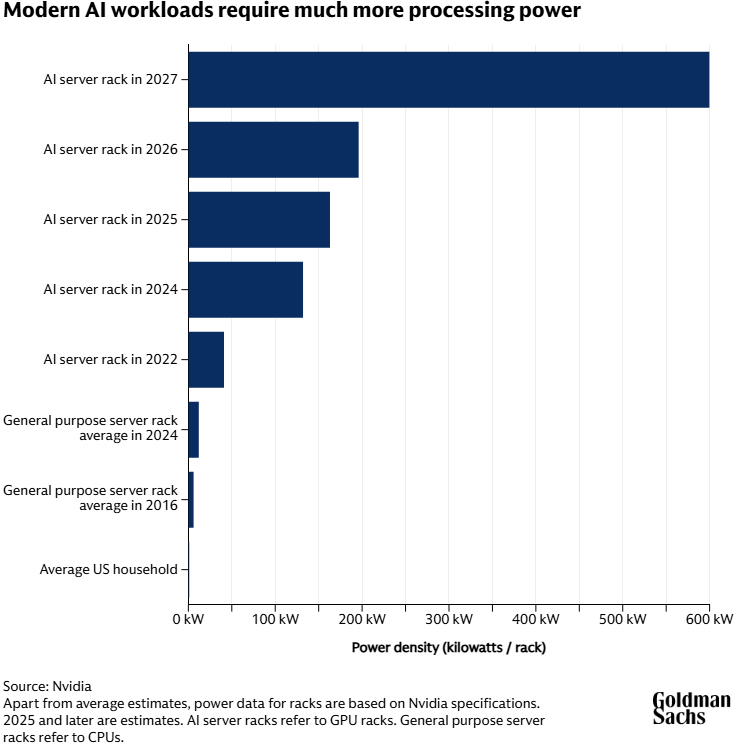

The thing is, AI data centers are not the same as the data centers of the past. Modern AI workloads require many GPUs working concurrently. In 2022, a cutting-edge AI system integrated only eight GPUs into a single server. By 2027, the leading system will likely have nearly 580 GPUs stacked in racks and working together, drawing 600 kilowatts. For context, that is enough power for 500 U.S. homes.
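The arithmetic behind that comparison is easy to check. The sketch below uses only the figures quoted above (600 kW, roughly 580 GPUs, 500 homes); the per-GPU number is a system-level average that folds in networking, cooling, and other overhead, not a chip specification.

```python
# Figures quoted above for a leading 2027-era AI system.
system_power_kw = 600
gpu_count = 580          # "nearly 580" in the text
homes_equivalent = 500

HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years

# Average power per GPU at the system level (includes all overhead).
kw_per_gpu = system_power_kw / gpu_count

# Average continuous draw per home implied by the 500-home comparison.
kw_per_home = system_power_kw / homes_equivalent
kwh_per_home_per_year = kw_per_home * HOURS_PER_YEAR

print(f"~{kw_per_gpu:.2f} kW per GPU, system-wide")
print(f"{kw_per_home:.1f} kW average draw per home")
print(f"~{kwh_per_home_per_year:,.0f} kWh per home per year")
```

The implied figure of about 10,500 kWh per home per year is in the neighborhood of commonly cited U.S. household averages, so the 500-home comparison holds up as a rough rule of thumb.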

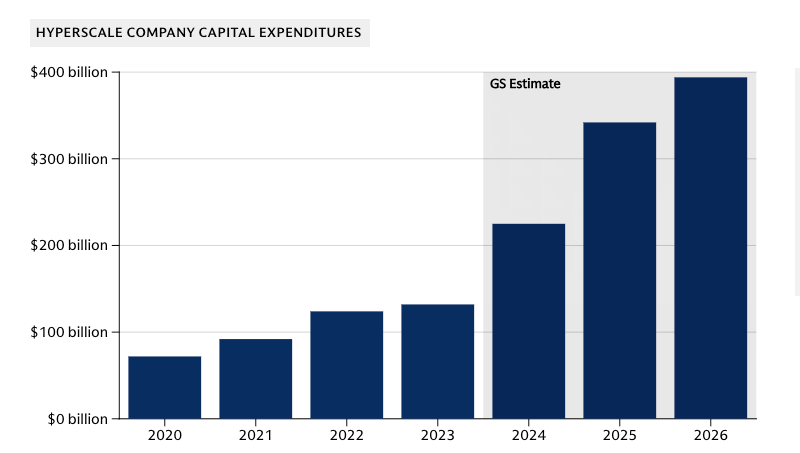

And capex continues to increase across hyperscalers, as seen in each big tech company’s earnings filings and announcements. Goldman Sachs projects that the five highest-spending U.S. hyperscale enterprises will have a combined spend of USD 736 billion in 2025 and 2026 for AI data center build-out.

The Love-Hate Relationship: Electricity, Data Centers, and AI

While there is talk about how AI will help make electricity generation more efficient, that has yet to be proven at scale. The hurdles to more energy right now are 1) legitimate environmental concerns, 2) policy constraints, and 3) the ability of companies to ramp up.

On a human-race level, the question should be: if we cannot avoid the increasing need for data centers with the progression of AI development, is covering our earth with data centers the solution to our future?

On a nation-to-nation level, the U.S. and China are racing not just to build better models, but to secure the energy infrastructure that sustains them. In the U.S., hyperscaler capex will cross $700 billion in just two years, largely to supercharge AI data centers. In China, renewable energy mandates will tie AI expansion to the country’s green transition, although coal and gas will remain in the mix.

What’s clear is that AI has turned good old boring electricity into a new strategic resource. Whoever can deliver abundant, affordable, and sustainable power will not just run the best models but also set the pace for the global AI economy.