The Jevons Paradox in AI Infrastructure: DeepSeek Efficiency Breakthroughs to Drive Energy Demand

The AI Energy Paradox: Why Cheaper AI Will Drive Record Power Demand

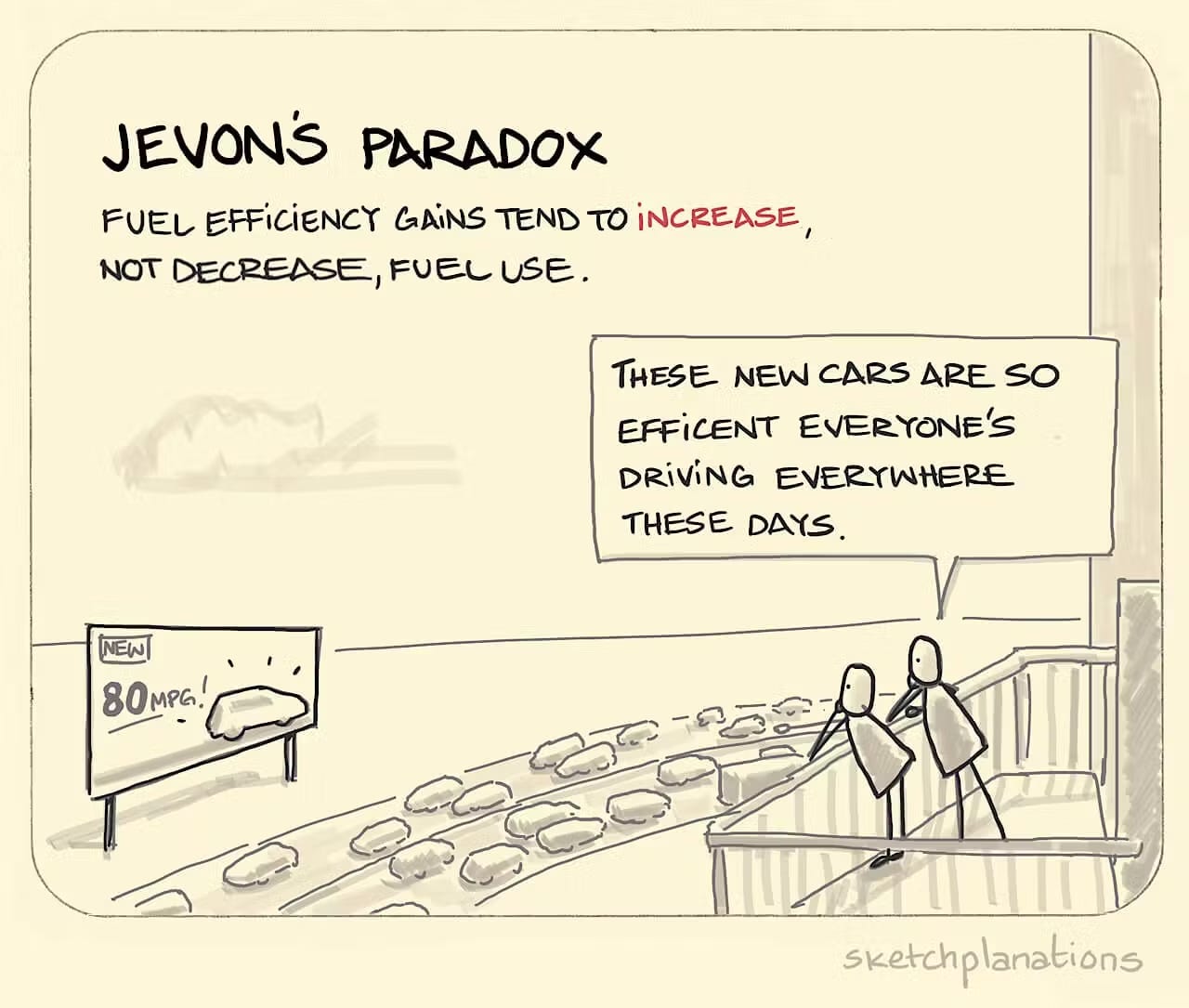

When steam engines became more efficient in the 1800s, coal consumption skyrocketed. As AI gets dramatically cheaper, history is about to repeat itself.

DeepSeek’s R1 release last week sent shockwaves through the U.S. power and technology sectors, causing significant declines in stock prices and raising doubts about future energy demands for AI infrastructure and the direction of AI capex.

Specifically, many power companies closely tied to the tech industry's data center buildout saw substantial drops in their stock prices, despite having been among the top performers in the S&P 500 earlier this year:

Constellation Energy: Down over 16%

Vistra Corp: Fell by over 16%

GE Vernova: Dropped approximately 18%

Talen Energy: Decreased by more than 15%

However, Microsoft CEO Satya Nadella was the first to say that this might be the wrong reaction to increased efficiency.

This was first published as a guest post.

Market Myopia: Misreading the Efficiency-Demand Cycle

Following the release of DeepSeek R1, energy stocks plunged, revealing a short-term misreading of energy economics or, more accurately, knee-jerk panic. Like 19th-century observers who thought coal demand would fall with the steam engine (by 100x, based on the JPM Asia DeepSeek report), markets sometimes conflate efficiency with sufficiency.

A recent UBS analysis made the same point: “While DeepSeek’s 97% cost reduction in AI training (vs. GPT-4) appears deflationary, it mirrors the internal combustion engine’s 100x efficiency leap, which drove 223x global oil demand growth post-1900.”

Here's why it's not as simple as "more efficient AI = less energy use":

Cheaper AI: First off, AI just got a whole lot more affordable. We're talking about a 97% price drop for using AI services, with costs now at $2.20 per million tokens compared to GPT-4's $81, according to JPMorgan's AI Adoption Index. This means many medium-sized businesses that couldn't afford AI before are now jumping on board: about 73% of mid-market firms, to be precise. It's like when smartphones suddenly became affordable and everyone wanted one.

Video: Next, consider how much of the internet is now video. According to a Bank of America report, it's a lot: about 80%. The thing is, AI needs way more juice to handle video than text, 3 to 5 times more compute power, as noted in JPMorgan's Compute Multiplier Report. So, as more companies use AI for video (think TikTok filters or YouTube recommendations), the energy demand will skyrocket. We're looking at a potential $47 billion latent demand pool here.

Edge AI: Lastly, we're putting AI everywhere—not just in big data centers but into our phones and street lamps. This "edge AI" is excellent for speed, but it means we need to upgrade a lot of equipment. JPMorgan reports that on-device AI processing boosts 5G base station power draw by 40–60%, necessitating 22 million new global nodes by 2030. Each of these uses more power than before.

So, while AI is becoming more efficient, we're using it for so many more things in so many places that total energy use is still set to climb. It's a bit like how fuel-efficient cars led to more driving, not less gas use overall.
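The 97% figure in the first point can be checked directly from the two quoted prices. The short Python sketch below does that arithmetic and also computes a break-even usage multiple: how much token usage would have to grow before total spending returns to its old level. The function names are illustrative, and the break-even step assumes spending scales linearly with tokens, a simplification that does not come from the reports cited above.

```python
# Prices quoted above, in USD per million tokens.
GPT4_PRICE = 81.00
DEEPSEEK_PRICE = 2.20


def price_reduction(old_price: float, new_price: float) -> float:
    """Fractional price drop, e.g. 0.97 for a 97% reduction."""
    return 1.0 - new_price / old_price


def break_even_usage_multiple(old_price: float, new_price: float) -> float:
    """Usage growth at which total spend equals the old spend.

    Assumes spend = price * tokens (a simplifying assumption).
    """
    return old_price / new_price


if __name__ == "__main__":
    drop = price_reduction(GPT4_PRICE, DEEPSEEK_PRICE)
    multiple = break_even_usage_multiple(GPT4_PRICE, DEEPSEEK_PRICE)
    print(f"Price reduction: {drop:.1%}")
    print(f"Break-even usage multiple: {multiple:.1f}x")
```

On these numbers, usage would need to grow roughly 37-fold before buyers spend as much as they did at the old price. Whether demand actually grows that much is the open question the rest of this piece argues about.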

For additional context, Scott Chamberlin, who builds tools to examine the environmental costs of certain digital activities, tested how much energy a GPU uses for a DeepSeek query and found that a 1,000-word response from the DeepSeek model took 17,800 joules to generate. As reported by MIT Technology Review, that is about what it takes to stream a 10-minute YouTube video. The figure is that high partly because DeepSeek’s answers tended to be longer than those of other chatbots. So, although the model is more efficient, it didn’t decrease the overall amount of energy used, contrary to what many people assumed.
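To put the 17,800-joule figure in more familiar units, the sketch below converts it to watt-hours and scales it to a batch of responses. The one-million-response batch is a hypothetical workload chosen for illustration, not a number from Chamberlin's test, and the helper names are made up for this example.

```python
JOULES_PER_WATT_HOUR = 3600.0  # 1 Wh = 3,600 J by definition

# Measured figure quoted above: energy for one 1,000-word response.
JOULES_PER_RESPONSE = 17_800.0


def joules_to_watt_hours(joules: float) -> float:
    """Convert an energy amount from joules to watt-hours."""
    return joules / JOULES_PER_WATT_HOUR


def batch_energy_kwh(joules_per_response: float, responses: int) -> float:
    """Total energy in kWh, assuming every response costs the same."""
    total_wh = joules_to_watt_hours(joules_per_response) * responses
    return total_wh / 1000.0


if __name__ == "__main__":
    per_response_wh = joules_to_watt_hours(JOULES_PER_RESPONSE)
    print(f"One response: {per_response_wh:.2f} Wh")

    hypothetical_batch = 1_000_000  # assumed workload, for scale only
    total_kwh = batch_energy_kwh(JOULES_PER_RESPONSE, hypothetical_batch)
    print(f"{hypothetical_batch:,} responses: {total_kwh:,.0f} kWh")
```

A single response comes to just under 5 Wh, which is small on its own. The point of the scaling step is that per-query savings matter less than how many queries get made.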

Efficiency, in energy systems, is demand’s accelerant.

Microsoft CEO Satya Nadella crystallized the market’s myopia hours after DeepSeek’s launch, posting on X: “Jevons paradox strikes again! As AI gets more efficient and accessible, we will see its use skyrocket.”

Nadella’s economics lesson wasn’t just academic. It foreshadowed Microsoft’s $13 billion AI revenue run rate, which was disclosed days later during its FY25 Q2 call — up 175% year over year. His framing mirrors Meta’s playbook: Cheaper inference costs don’t reduce energy demand because AI can be embedded into every platform (which is cool and scary).

Even if the R1 efficiencies are realized in all new models, the hyperscalers' AI capex and energy budgets will likely remain strong in the long run. Because…

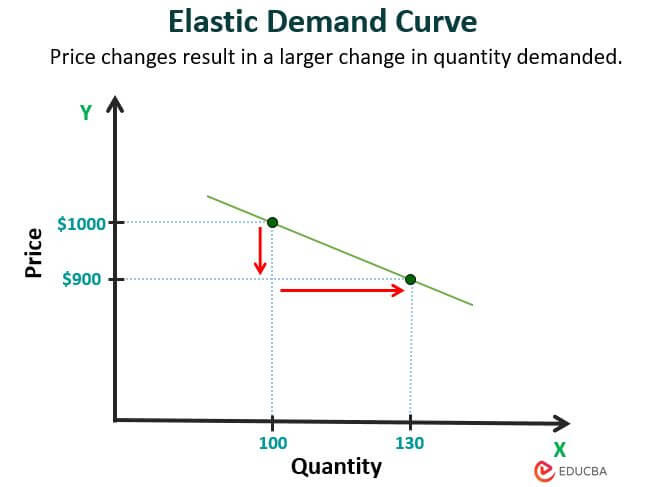

“When AI gets cheap, you know what’s going to happen? There’s going to be a lot more AI,” said Travis Kalanick, founder of Uber, on the All In Podcast. “I think the price elasticity on this one is actually positive. So, as the price goes down, the revenue and usage will go up (through the roof).”

AI isn’t inventing the wheel here. In a 2010 New Yorker article titled “The Efficiency Dilemma,” David Owen wrote, “The first fuel-economy regulations for U.S. cars—which were enacted in 1975, in response to the Arab oil embargo—were followed not by a steady decline in total U.S. motor-fuel consumption but by a long-term rise, as well as by increases in horsepower, curb weight, vehicle miles traveled (up a hundred percent since 1980), and car ownership (America has about fifty million more registered vehicles than licensed drivers).”

For the Econ nerds, I highly recommend the New Yorker article. It goes into the background of how the economists of that century were related and how John Maynard Keynes “found out about” William Stanley Jevons and thought of him as “one of the minds of the century.”

Jevons Paradox 2.0: The Three-Phase Demand Engine

This brings us to the Jevons paradox, which I first touched on in the piece about R1’s industry implications.

What is the Jevons paradox? The Jevons paradox occurs when the effect of increased demand predominates, and the improved efficiency results in a faster rate of resource utilization. (Wikipedia)

In layman’s terms, when a technology becomes cheaper, usage explodes, even if the technology is only "good enough." For example, in the 1800s, steam engines burned coal more efficiently, but factories used more coal overall to power new machines rather than less.

Similarly, if AI becomes more affordable (DeepSeek R1 is currently much cheaper than other frontier models), we can expect startups, governments, consultants, hospitals, and even schools to use far more AI than they do today.
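The condition under which efficiency raises total resource use can be written down in a few lines. The sketch below is a toy model, not something from Jevons or from the reports cited in this piece. It assumes constant-elasticity demand (usage proportional to price raised to the power of minus the elasticity) and assumes the efficiency gain is fully passed through to price. The 10x gain and the elasticity values are hypothetical.

```python
def usage_multiplier(efficiency_gain: float, elasticity: float) -> float:
    """Growth in usage when unit cost falls by `efficiency_gain`.

    Assumes constant-elasticity demand: usage ~ price ** (-elasticity),
    with the efficiency gain fully passed through to price.
    """
    return efficiency_gain ** elasticity


def energy_multiplier(efficiency_gain: float, elasticity: float) -> float:
    """Change in total energy: usage grows, energy per unit shrinks."""
    return usage_multiplier(efficiency_gain, elasticity) / efficiency_gain


if __name__ == "__main__":
    gain = 10.0  # hypothetical: each unit of AI work needs 10x less energy
    for elasticity in (0.5, 1.0, 1.5):
        change = energy_multiplier(gain, elasticity)
        print(f"elasticity={elasticity}: total energy x{change:.2f}")
```

Under these assumptions, total energy use falls when elasticity is below 1, stays flat at exactly 1, and rises when it is above 1. The Jevons paradox is the last case, so the debate over AI energy demand is largely a debate over how elastic demand for AI really is.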

As Anthropic co-founder Dario Amodei wrote on his blog, “because the value of having a more intelligent system is so high,” efficiency gains lead “companies to spend more, not less, on training models.” He added that “the gains in cost efficiency end up entirely devoted to training smarter models, limited only by the company’s financial resources.” So if companies get more for their buck, they have more reason to invest in AI and thus in energy.

The English economist and logician William Stanley Jevons’ 1865 insight—that efficiency gains paradoxically increase resource consumption—now basically governs AI’s infrastructure buildout through three evolutionary phases:

Phase 1: Overbuilding Precedes Overconsumption

In 1999, telecoms laid 28 million miles of unused “dark fiber,” which was mocked as wasteful until streaming video exploded bandwidth demand by 10,000x. Today’s parallel: OpenAI’s $500 billion Stargate Project targets 100-trillion-parameter models that are not yet needed but will be essential for 2030’s autonomous supply chains, which require real-time global logistics simulation.

Phase 2: Compression Fuels Complexity

DeepSeek R1’s 45:1 efficiency gain over GPT-4 follows this pattern: By making real-time 4K video translation commercially viable, it could triple AI’s inference workloads by 2028 (according to McKinsey’s The State of AI in 2024 report).

Phase 3: Infrastructure Demands Better Infrastructure

Just as broadband required five times more network hubs than dial-up, Meta’s $65B AI capex surge funds edge devices (smart glasses, AR headsets) that will necessitate 22 million AI-optimized 5G nodes. These nodes consume 40–60% more power than legacy systems, creating a self-reinforcing cycle in which smarter devices demand smarter grids.

Hyperscalers’ $1 Trillion Commitment: Building the Intelligence Furnace

For months, we’ve examined the energy needed to power data centers, as well as China's and America’s competitive advantages in driving a top-down supply build-up. We’ve also explored renewable options, especially nuclear energy (SMRs), as the U.S. currently faces an energy shortage.

And those explorations weren’t completely wrong. According to an industry expert, the AI industry is indeed facing a major energy challenge, and U.S. cloud service providers (CSPs) are turning to nuclear energy, with its impressive 90%+ efficiency compared to solar's 50%, to meet ambitious carbon reduction goals (especially under Biden’s administration).

There have also been suggestions that, in the U.S., CSPs' electricity demand is already outpacing grid capacity, driven by the rapid growth of Edge AI and increasingly complex models. This trend points to a future where AI compute and energy are bundled as a utility service, with customers paying based on their AI usage.

For consumers, the future of spending on AI could be like paying for water or electricity in our homes—the more you use, the more you pay. New technology often obviates the old way of doing things entirely.

As for the hyperscalers, despite the initial market reaction and all the DeepSeek fuss, none of them is lowering AI infrastructure spending in the near term, nor are the two superpowers lowering their energy demand expectations.

The Stargate Project

OpenAI and SoftBank’s $500 billion infrastructure initiative, signed last month, represents the most significant private-sector tech investment in history. The deal isn’t about raw compute but about power grid dominance. It requires 12GW of sustained electricity (comparable to Denmark’s grid capacity), and the size of that demand is insane. Stargate’s architecture also includes modular nuclear reactors to bypass overloaded utilities, confirming what Microsoft’s CTO Kevin Scott said: “Training clusters are becoming national infrastructure.”

Meta’s Unwavering Capex Plan

Just days after DeepSeek’s release, Meta’s Zuckerberg raised 2025 AI spending to $60–65 billion (+50% year-on-year) during the latest earnings call, declaring, “I believe scaling up infrastructure is still an important long-term advantage.” This timing underscores a critical insight: far from curbing investments, efficiency breakthroughs like DeepSeek validate hyperscalers’ infrastructure arms race, contrary to what the market panic implies.

Conclusion: The Intelligence Inflation Era

Last week’s sell-off in the energy and power sectors mirrors the skeptics Jevons addressed in his 1865 book The Coal Question, who thought more efficient coal use would reduce coal demand (spoiler alert: it increased massively). DeepSeek’s efficiency leap isn’t a singularity; it’s the spark for AI’s future use-case explosion across three frontiers:

Energy Arbitrage: At $0.02/kWh parity (Goldman’s 2026 forecast in its AI’s Energy Tipping Point report), AI inference becomes cheaper than human labor for 23% of service jobs.

Systemic Complexity: According to Reuters, Walmart’s AI supply chain processes 6x more data than its 2023 system but uses 9x more energy—a Jevons tradeoff executives accept for 40% fewer stockouts.

Infrastructure Inertia: Once hyperscalers deploy 22 million AI nodes, their operational costs create irreversible momentum, similar to gas stations cementing fossil fuel dominance after the 1900s.

Amid the short-term panic of the energy stock sell-off, some investors might forget that efficiency gains have always been demand’s accelerant, not its limit.

The questions to ask ourselves are: 1) How can we be more mindful of preserving our earth while we KNOW that we need more energy for increased AI demand? 2) AI demand will only increase, as internet demand did. It will be seamlessly integrated into our daily lives and eventually normalized. How do we regulate it better?

The defining resource of the 21st century may not be about who controls artificial intelligence or computer programs but who has access to and can control the electricity needed to power these technologies.

Additional thoughts:

In the book Electrifying America: Social Meanings of a New Technology, 1880-1940, which many AI industry analysts have recommended recently, author David E. Nye highlighted key points about electricity, many of which can be applied to AI and its impact on society. He wrote that:

Electricity touched every part of American life, becoming an extension of political ideologies and shaping the image of the modern city.

Electrification was expressed in utopian ideas and became a focal point of various art forms and moral debate.

Electricity completely redefined domestic work, agricultural production, and what efficiency meant for humanity.

Despite social, safety, and ethical concerns, we humans only wanted more and more of it. In many ways, our discussions around AI have been similar.

Innovation that increases productivity isn’t new; we have seen it throughout history. When electricity became more accessible, our expectations for productivity only increased. The rationale is that if you increase the productivity of anything, you effectively reduce its implicit price because you get more return for the same money—which by default means that the demand will go up.

In my book Humanity, I explore the intersection between AI and neuroscience and psychology. I love the dialogue your posts are creating. It is important for us all to understand the implications of AI and robotics for what it means to be human.

howardlack.com

https://spectrum.ieee.org/transformer-shortage

"the wait time to get a new transformer has doubled from 50 weeks in 2021 to nearly two years now, according to a report from Wood MacKenzie, an energy-analytics firm. The wait for the more specialized large power transformers (LPTs), which step up voltage from power stations to transmission lines, is up to four years. Costs have also climbed by 60 to 80 percent since 2020.

Causes are varied: demand, aging infrastructure, and damage from storms and war/sabotage. It’s impacting not only renewable energy projects but also new home construction, as permits were denied because a utility couldn’t get enough step-down transformers.

As for expanding capacity:

Transformer manufacturing used to be a cyclical business where demand ebbed and flowed—a longstanding pattern that created an ingrained way of thinking. Consequently, despite clear signs that electrical infrastructure is set for a sustained boom and that the old days aren’t coming back, many transformer manufacturers have been hesitant to increase capacity."

Hi Grace, hope this is interesting to you. I'd note that this is mostly from a Western perspective; China (and probably Russia) has apparently been left out of this survey. Both Russia and China have increased their capacity in transformer production, but most of this supply goes to domestic use as they build out infrastructure.