Bigger Isn't Always Better: Scale May Not Be the Future AI Strategy, RLHF Is

RLHF converts IQ into experience and makes mass AI diffusion more likely

Dear all,

As I skimmed the AI headlines from the past two weeks, one thing that jumped out was Alibaba’s Apsara Cloud event, which was covered extensively. The headline takeaways: (1) token usage is doubling every two to three months; (2) Alibaba plans an additional ~RMB 150–200B in annual investment on top of the previously announced RMB 380B over three years; and (3) global data center power consumption could be 10x higher by 2033 versus 2022. Taken together, this signals that BABA is going all in on AI across the stack, aiming to become a true full-stack AI services provider. For context, the big four U.S. cloud providers are collectively on pace to invest roughly US$400–$500 billion in 2025–2026. Scale still dominates the headlines.

Today’s piece is long and, I’d like to think, thoughtful. So please stay with me.

Now, every day brings something new in this sector, and time feels slippery. Yet in my own house, time has slowed. With my newly arrived two-week-old at home, the rhythm is just feeding, diapers, and naps on repeat. But in only 16 days, she’s gained nearly a third of her body weight. Growth feels exponential when the base is small.

Agentic AI Is the Future

Increasingly, I don’t see AI as “a tool” or merely “a tech thing,” and as I think about how AI will transform our society, it evokes Marc Andreessen’s famous essay, “Why Software Is Eating the World.” Now, the question is: how will AI practically eat the world?

If you’ve been following AI Proem, you know two threads have dominated my attention (obsession) recently: (1) launching the podcast, which features a stellar set of journalists, investors, and policy analysts covering China’s AI, and (2) trying to understand how agentic AI will reshape knowledge work. Greg Isenberg put it starkly: “What the app store did for software, agent builders could do for intelligence”.

The current underlying AI fundamentals remain very strong, and adoption is accelerating. While pre-training scaling has slowed, reinforcement learning (RL) is a genuine breakthrough, driving another step-function improvement in AI model capabilities.

At this point, it is clear that current AI model capabilities are far ahead of applications. Some in Silicon Valley say that even if we paused frontier model development today, we would probably have 10 years of application innovation ahead. Application proliferation no longer needs to rely on pre-training and the scaling law to keep improving model performance. So we can predict that over the next two years, AI will likely become more economically useful and valuable through applications, especially agentic AI.

As you can tell, I have been reading, listening, and resting with my little one. I have also found some time to sit with my thoughts and ponder a bigger theme behind these currents: does the scaling-law paradigm still make sense, or are we shifting toward something more grounded with reinforcement learning?

So here we go…

Reinforcement Learning Changes the Game

Back in January 2025, on AI Proem, we asked whether OpenAI’s o3 changed the scaling-law debate. Ten months later, I have finally arrived at a more concrete framework for how AI will progress in real-life use cases that may not require the biggest and latest LLM.

First, let’s understand some basic concepts.

Scaling means making the base model bigger, with more data, more compute, and more parameters, to unlock general capability (this we know, right?).

Reinforcement learning (RL) takes a different route: learning by doing. An AI agent attempts a task, receives a reward or penalty, adjusts, and tries again. When that feedback comes from people doing the job, that’s RL from human feedback (RLHF), and the system optimizes for what actually matters in context: accuracy, tone, safety, and helpfulness.

Consider a sales follow-up. After a call, someone has to draft the email, log the right fields in Salesforce, and schedule the next touch. With RLHF, the model does reps on that workflow; experts grade each attempt; the system learns which phrasing lifts reply rates and which field mappings reduce rework.
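To make the attempt, reward, adjust, repeat loop concrete, here is a minimal sketch in Python. It is a toy epsilon-greedy bandit, a far simpler technique than the methods actually used to fine-tune large models with RLHF, and everything in it is an assumption for illustration: the phrasing names, the simulated reply rates standing in for expert grades, and the function name `train_phrasing_policy`.

```python
import random

def train_phrasing_policy(true_reply_rates, rounds=5000, epsilon=0.1, seed=0):
    """Toy learning-by-doing loop: try a phrasing, get a reward, adjust.

    true_reply_rates is a hypothetical stand-in for real feedback
    (for example, expert grades or observed reply rates).
    """
    rng = random.Random(seed)
    phrasings = list(true_reply_rates)
    counts = {p: 0 for p in phrasings}      # how often each phrasing was tried
    values = {p: 0.0 for p in phrasings}    # running average reward per phrasing

    for _ in range(rounds):
        # Attempt: usually exploit the best-known phrasing, sometimes explore.
        if rng.random() < epsilon:
            choice = rng.choice(phrasings)
        else:
            choice = max(values, key=values.get)

        # Feedback: reward 1.0 if the simulated buyer replies, else 0.0.
        reward = 1.0 if rng.random() < true_reply_rates[choice] else 0.0

        # Adjust: update the running average for the phrasing we tried.
        counts[choice] += 1
        values[choice] += (reward - values[choice]) / counts[choice]

    return values, counts

# Hypothetical phrasings and reply rates, invented for this sketch.
simulated_rates = {"generic_thanks": 0.05, "recap_and_next_step": 0.30, "long_pitch": 0.10}
values, counts = train_phrasing_policy(simulated_rates)
best_phrasing = max(values, key=values.get)
```

The agent never sees the underlying reply rates. It only sees rewards for the attempts it makes, which is the sense in which the skill comes from reps and not from a bigger model.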

Now, let me piece together some ideas that a16z’s Marc Andreessen, Professor Ethan Mollick, and Sequoia’s Pat Grady have shared, and how they have come together in my head to make sense of the future of AI.

Think “Balloon with Spikes” as AI’s New Path to Usefulness

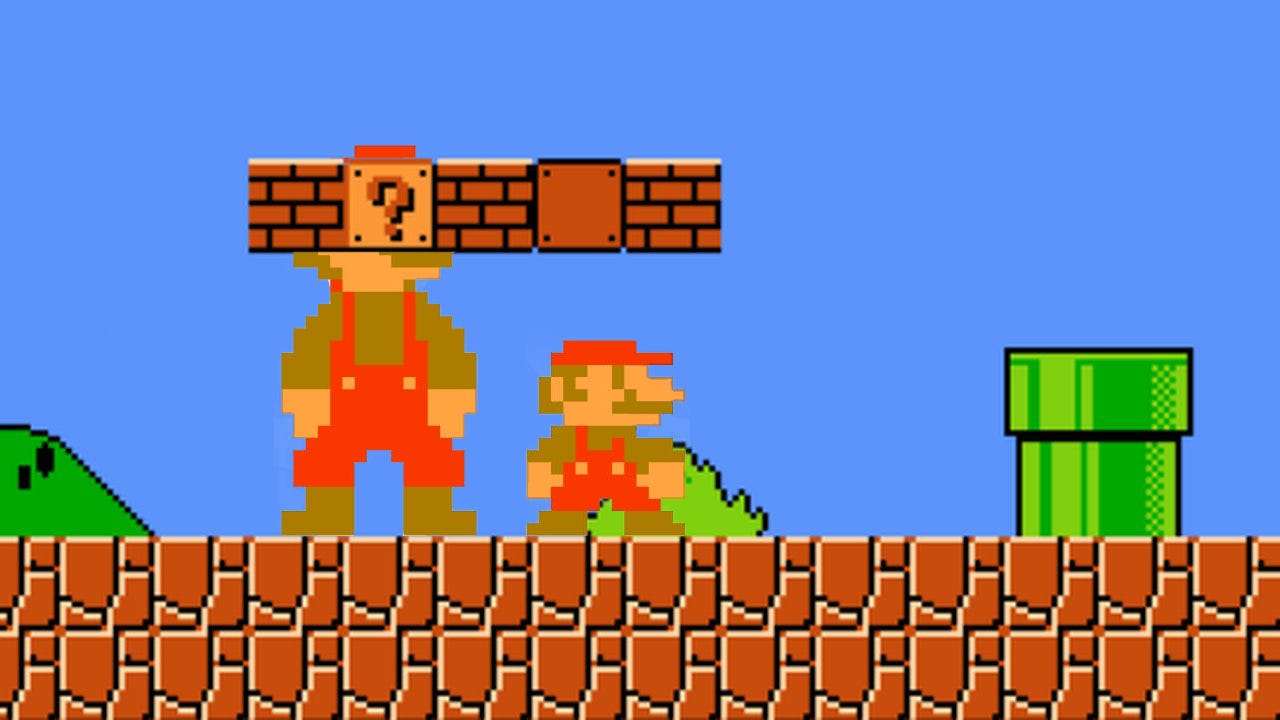

So in an investor meeting a month ago, Pat Grady shared a metaphor that offers a twist on the whole scaling law vs. RL conundrum. Think of a model as a ball. Scaling is pumping more air to make the ball larger. RLHF is adding spikes, and each spike is a skill sharpened through practice. Add enough spikes and the ball’s reach grows where it matters, even if the core isn’t much bigger.

Scaling = pumping air (data, compute, model size) → bigger, flashier capabilities (all the PhD math, physics, and benchmarks)

For two years, the tech and AI industry focused on inflating a giant beach ball (more data, more compute, more parameters) so it could roll into more use cases. That’s the scaling-law worldview: if the model isn’t good enough, pump in more air.

What if, instead of fighting for more air, we attached spikes to the ball, and then each spike represents a specific skill sharpened through practice? Add enough spikes and the ball doesn’t just get bigger, it also becomes more useful. It grips the ground.

I didn’t love this analogy at first, tbh it sounded a little gimmicky, but now I get the genius. Hear me out now.

AlphaGo is the classic example. It didn’t memorize the game; it learned winning strategies by playing, getting rewarded for good moves, and being penalized for bad ones.

This matters because the scaling-law story has always been expensive and uncertain. Where does the next order of magnitude of “air” come from? Think clean data, cheaper compute, bigger budgets.

From Child Prodigy to Applied Expertise

The original dream for many was that a single model would be able to write a sonnet, draft a contract, and debug a build script without breaking a sweat. But now, researchers can continue to chase the frontier model and test themselves against benchmarks, and business builders can stop arguing about whether the scaling curve is flattening and shift their thinking to how to better adopt RL.

RL doesn’t promise that a larger brain will magically generalize to your use case. It shows you how to teach the brain a job.

Picture the base model as a brilliant new graduate: breathtaking recall, broad knowledge, impeccable logic, and zero job experience. Left alone, it writes smart-sounding emails (with big SAT words) that miss the point or fill your CRM with beautifully wrong fields.

RL is the apprenticeship. A top salesperson role-plays with the agent, noting which phrasing disarms a skeptical buyer and which kills momentum. A nurse manager reviews clinical notes, rewarding clarity and penalizing omissions that create risk. A revenue-cycle lead grades denial letters on tone, compliance, and outcome. It is essentially the guidance needed to train up an intern: knowledge, instinct, and industry experience, a lot of things that are taught through time and mistakes. But now, each RL cycle leaves a trace. The agent builds the kind of muscle memory we usually associate with people who’ve done the job for years. That’s a spike.

And this is the direction real-life AI use is taking all around us.

Ethan Mollick captures the pivot in his recent essay Real AI Agents and Real Work: “Generative AI has helped a lot of people do tasks since the original ChatGPT, but the limit was always a human user. AI makes mistakes and errors, so without a human guiding it on each step, nothing valuable could be accomplished.” Ultimately, “Agents, however, don’t have true agency in the human sense. For now, we need to decide what to do with them, and that will determine a lot about the future of work,” as Mollick writes.

Bottlenecks Reversed

This is why the bottleneck has flipped. The limiting factor is no longer raw model IQ; it’s the scaffolding around it: data pipelines, reward design, expert time, and safe tool access.

Last year, many of us held our breath to see whether model capability would keep leaping forward and whether scaling laws would hold. That uncertainty has partly dissipated, replaced by a clearer roadmap for integrating AI into existing digital infrastructure. Today, the constraint isn’t raw intelligence. It’s scaffolding. (We touched on this in April in “The Future of SaaS Is a Hybrid of Agent & Platform + Vertical AI Innovation.”)

Do you have data pipelines that feed the agent a fresh, permissioned context? Can you define success tightly enough to prevent reward hacking or optimizing for “shorter” when you meant “clearer”? Are human coaches available and calibrated, or juggling two jobs and three dashboards? Does the agent have tool access with guardrails to do the task rather than merely describe it?

These aren’t mysteries of science. They’re the kinds of engineering and process questions strong organizations solve every day. That’s why the spiky-ball lens is optimistic without being naive. Scaling laws asked us to trust that if we inflated the center, usefulness would trickle down. RL gives us a map: pick a job, define a reward, run the reps, wire it into production, measure the lift, repeat. The uncertainty narrows from “Will we get another emergent capability?” to “Can we build and maintain this feedback loop?” That’s a better kind of risk.

Of course, RL has its own traps. Agents optimize what you measure, not what you intended, and some things cannot be conveyed through language: a gut feeling, an experience, an ick, a red flag. So do not expect an agent to pick up on those. And if you reward brevity, don’t be surprised by confident nonsense under 100 words.
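The brevity trap is easy to show with a small sketch. The two reward functions, the required facts, and the sample drafts below are all hypothetical, invented for illustration, and a real rubric would be far richer. The point is only that the same selection step picks a very different winner depending on what the reward measures.

```python
# Hypothetical facts a good follow-up email should mention (an assumption for this sketch).
REQUIRED_FACTS = ("price", "next step", "deadline")

def brevity_reward(draft):
    """Naive reward: shorter is always better. This is the trap."""
    return 1.0 / (1 + len(draft.split()))

def rubric_reward(draft, required=REQUIRED_FACTS, max_words=100):
    """Rubric reward: score coverage of required facts, penalize only excess length."""
    text = draft.lower()
    coverage = sum(fact in text for fact in required) / len(required)
    length_penalty = 0.0 if len(draft.split()) <= max_words else 0.5
    return max(0.0, coverage - length_penalty)

# Two invented candidate drafts.
short_draft = "Sounds good, talk soon!"
full_draft = (
    "Thanks for the call. The price is 12k per year, the next step is a "
    "security review, and the deadline to sign is March 1."
)
candidates = [short_draft, full_draft]

picked_by_brevity = max(candidates, key=brevity_reward)  # the four-word draft
picked_by_rubric = max(candidates, key=rubric_reward)    # the draft that covers the facts
```

Under the brevity reward the empty pleasantry wins. Under the rubric, the draft that states the price, the next step, and the deadline wins while still staying under the length cap.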

In corporate terms, ambiguous domains such as mission, culture, and brand voice sometimes do not have a single correct answer, so you’ll need robust rubrics and periodic adjudication by senior experts. And as agents touch more systems, the blast radius of a permissions mistake grows; governance must be part of the design, not an afterthought. None of these are reasons to retreat; rather, they are reasons to treat RL as an ongoing operation rather than a one-off project.

Now, finally, you could think about the spikes as a kind of institutional IP. Every feedback run encodes know-how that competitors can’t download from a model card. Spend tilts from one-time training to repeatable programs: expert time, tooling, integration, and telemetry. So, in the future, when examining a business, maybe start asking: Which workflows did RL sharpen? What’s the measured lift? How often does the agent relearn? Who are the coaches (are they credible and legit)?

Hot Take? Train those Spikes

So, did scaling laws hit a wall? Probably not, but who am I to even comment on this? In many ways, let’s borrow the words people liked to use to describe DeepSeek’s R1 model when Chinese businesses started mass adopting it across products: “it’s good enough.” Echoing what I said at the top of the article, growth feels exponential when the starting point is small. But at what point does size stop mattering, and when do we start building out our spikes through expertise and skills?

So the models we know today have hit a usefulness boundary. Bigger models will still matter, and that's for researchers and scientists to continue pursuing. But for businesses, the next mile is paved with reinforcement, which will allow AI to be more useful in practical ways.

Nearly ten months ago, I wrote with a kind of uncertainty, waiting to see if “more air” would carry us across the gap from just the chatbot and all these math/physics capabilities to something more commercially useful. Now, I feel like I see a clearer vision with agentic AI: teach the job, wire the loop, sharpen the spike, move the metric, and then do it again for the next job.

RLHF teaches a model what “good” looks like for a specific job using human ratings and examples. An agent is a trained model given access to your apps, data, and rules so it can complete multi-step tasks end-to-end. So in short, the relationship is that RLHF provides judgment and agents apply it in production.

To quote Mollick one last time, “Agents are here. They can do real work, and while that work is still limited, it is valuable and increasing.” So my point is, it’s on us to learn how to prompt, control, and identify AI work, because the next stage of knowledge work is in Agentic AI.

This is a piece that @Azeem Azhar would appreciate, I think. As well as @James Wang and @Kevin Xu.
