The Great AI Power Shift - From AI Models to AI Applications

From billion-dollar models to indispensable applications – where value migrates next

Last Friday, I was invited to a University of Hong Kong (HKU) event on DeepSeek’s implications for the AI industry, hosted by the AI & Humanity Lab and the Hong Kong Ethics Lab. During the panel session on financial and business implications, Vince Feng (Adjunct Associate Professor, Faculty of Business and Economics; Former Managing Director and Head of North Asia of General Atlantic) said that most value will be created and captured in AI applications.

We’re still in the early days of AI. Like many others, he compared building AI models to laying fiber networks in the early days of the Internet. During that era, fiber companies built out networks nationwide, which later enabled internet application companies such as Amazon, Google, and Facebook. Even then, it took several rounds of application iteration to get to where we are now.

What he said resonated with me, as I have been thinking that future value creation and capture will happen in the application layer rather than the foundation model layer.

"Big AI models will become commoditized... the real value lies in the applications built on top of them" – Nandan Nilekani, Infosys Chairman.

TL;DR

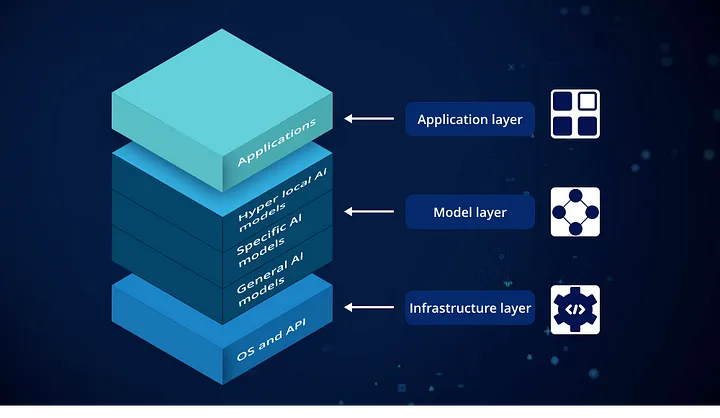

The AI industry's value is shifting from foundational models to applications that blend multiple AI models for specialized tasks.

Frontier AI models (GPT-4, Claude) face rapid depreciation as open-source alternatives (DeepSeek) erode their moats.

Apps like Perplexity and Cursor, once dismissed as “GPT Wrappers”, now dynamically combine models for superior task-specific performance, outperforming single-model platforms.

Competitive advantage lies in product execution, domain knowledge, and network effects, not model ownership.

Value now stems from solving real problems via adaptable AI integration, not owning the "best brain."

Frontier model providers may need vertical specialization (like cloud providers) as differentiation through generic models fades.

Investors have recently debated whether companies will and should continue to invest heavily in frontier models or whether it is time for the next phase of AI innovation, the application layer. Many argue that value creation is migrating from core model development to application-level solutions. Just like in the internet era, none of the fiber companies became trillion-dollar businesses, but a few of the application winners eventually did.

Gavin Baker, CIO and Managing Partner at Atreides Management, has spearheaded this conversation in Silicon Valley. He argues that frontier LLMs will depreciate quickly and be commoditized sooner rather than later, and that the real opportunities lie at the application end.

What are “GPT Wrappers”?

When we think of AI applications today, we often think of OpenAI’s ChatGPT, ByteDance’s Doubao, or, these days, DeepSeek. Yet, to many people’s surprise (mine, at least), many of today's most popular AI applications beyond these are not powered by a single, self-developed model. They operate more like a restaurant chef, sourcing ingredients from multiple suppliers (models) while crafting unique experiences. For example:

Cursor (an AI-powered code editor with a $2.6B valuation) now dynamically routes queries between Claude 3.5 Sonnet (for code refinement) and GPT-4o (for documentation synthesis), achieving 40% faster developer workflows than single-model approaches.

Perplexity (AI-driven answer engine; $9B+) maintains redundant model capacity like cloud providers keep backup generators, instantly switching between four AI providers during peak loads.

Glean (Enterprise AI platform; $4.6B) blends six AI models simultaneously in its enterprise search product, using proprietary algorithms to weight outputs based on query context.
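The failover behavior described for Perplexity can be illustrated with a short Python sketch. It is a minimal, hypothetical example, assuming each provider is wrapped in a plain function that takes a prompt and returns text; the provider names, stub functions, and `ProviderError` are made up for illustration and do not reflect Perplexity’s actual system or any vendor’s SDK.

```python
from typing import Callable, Sequence, Tuple

class ProviderError(Exception):
    """Hypothetical error raised when a model provider is overloaded or unavailable."""

# A provider is modeled as (name, function that maps a prompt to an answer).
Provider = Tuple[str, Callable[[str], str]]

def answer_with_failover(prompt: str, providers: Sequence[Provider]) -> Tuple[str, str]:
    """Try providers in priority order and return (provider_name, answer) from the first that succeeds."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            # Record the failure and fall through to the next (backup) provider.
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))

# Stub providers standing in for real API clients (hypothetical).
def overloaded_provider(prompt: str) -> str:
    raise ProviderError("capacity exceeded")

def healthy_provider(prompt: str) -> str:
    return f"answer to: {prompt}"

if __name__ == "__main__":
    providers = [("provider_a", overloaded_provider), ("provider_b", healthy_provider)]
    print(answer_with_failover("What is DeepSeek?", providers))
    # → ('provider_b', 'answer to: What is DeepSeek?')
```

Ordering the list by preference lets an app use its favorite model by default while treating the others as backup capacity, much like the backup generators in the analogy.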

All of these were once called “GPT wrappers.” Alvaro Vargas, Founder and CEO of Frontline, described the term as a dismissive label for a lightweight application or service that uses existing AI models or APIs to provide specific functionality, typically with minimal effort or complexity involved in its creation. A common view back then was that all those wrapper businesses would one day be replaced by the frontier models themselves. For example, Cursor is an AI coding assistant built on frontier models’ APIs (in its case, Anthropic’s Claude). Wouldn’t Anthropic one day replace Cursor and help users with coding directly?

“GPT Wrappers” over ChatGPT?

The assertion that “GPT wrappers” have little moat is now being challenged. YC partners said during an episode of the Lightcone Podcast that calling a startup a “GPT wrapper” for building on top of OpenAI’s APIs is the equivalent of calling a SaaS company a MySQL wrapper for building on top of a SQL database.

In any case, the moat of frontier models is now being questioned, ever since DeepSeek released an open-source model that is free for everyone to use and matches the capabilities of OpenAI’s o1. This has raised the question of whether the billions of dollars invested in frontier model development have been significantly impaired, or whether the ROI will ever make sense. Previously, people thought frontier models were protected by large capital requirements and technological leadership; now, neither appears to hold.

Another uncomfortable truth for frontier model companies is that no single model can be the best at everything. For example, OpenAI's GPT-4 leads in voice interaction parity (87% indistinguishable from humans in blind tests), Anthropic's Claude dominates complex coding tasks (62% adoption among developers), and Google's Gemini maintains search integration advantages.

This plays to the advantage of AI application companies. Suppose I’m using Perplexity. Purely for the sake of illustration, let’s assume that OpenAI’s models answer physics questions best, Claude is best for coding, and DeepSeek is best for writing. Depending on the type of question I ask, Perplexity can then call on the model best suited to the task. As a consumer, I won’t care, or even know, which model Perplexity used, so long as the user experience is good (i.e., the answers are satisfactory). Now consider OpenAI’s ChatGPT, which is limited to OpenAI’s own models. It will give worse answers to some of those questions, which in turn makes for a worse customer experience. All of a sudden, the “GPT wrapper” seems to have the upper hand against GPT itself.
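The routing idea in this hypothetical can be sketched in Python as well. This is a minimal illustration under loud assumptions: a crude keyword classifier stands in for real query classification, the task-to-model table simply mirrors the made-up rankings above, and labels like `claude-model` are placeholder strings, not real API model IDs.

```python
from typing import Optional

# Hypothetical task -> model table mirroring the illustrative (not real) rankings in the text.
BEST_MODEL = {
    "physics": "openai-model",
    "coding": "claude-model",
    "writing": "deepseek-model",
}
DEFAULT_MODEL = "openai-model"

# Crude keyword lists standing in for a real query classifier (assumption).
KEYWORDS = {
    "physics": ("force", "energy", "quantum", "velocity"),
    "coding": ("python", "bug", "function", "compile"),
    "writing": ("essay", "email", "poem", "rewrite"),
}

def classify(query: str) -> Optional[str]:
    """Return the first task type whose keywords appear in the query, or None if none match."""
    text = query.lower()
    for task, keys in KEYWORDS.items():
        if any(key in text for key in keys):
            return task
    return None

def route(query: str) -> str:
    """Pick a model for the query; the user only ever sees the answer, not the model name."""
    task = classify(query)
    if task is None:
        return DEFAULT_MODEL
    return BEST_MODEL[task]

if __name__ == "__main__":
    print(route("Why does my Python function throw an error?"))  # → claude-model
    print(route("Rewrite this email to sound friendlier"))       # → deepseek-model
    print(route("What's the weather today?"))                    # → openai-model
```

A production router would more likely rely on a trained classifier and evaluation data, but the shape is the same: classify the request, look up the best model, and keep the choice invisible to the user.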

The same dynamic is playing out across many AI application companies that focus on solving specific customer problems with the best-suited model. This could mark a paradigm shift in where value is ultimately created and captured across the AI technology stack. It also means that competitive advantages, or moats, will stem from deploying AI effectively for end customers rather than from possessing advanced AI models. Success will increasingly depend on product-market fit and old-school execution rather than on technological breakthroughs.

Much like during the Internet era, it wasn’t the owners of the routers and fiber-optic cable infrastructure who dominated the consumer market; it was the companies with the most innovative use cases, the ones that transformed how we shop, order food, and hail taxis, that won over consumers.

Similarly, the AI revolution's second act won't be about who builds the best brains but who designs the most indispensable tools with the best user experience.

Of course, the biggest caveat to this prediction is that a single frontier model company could come up with one model that rules them all: the best at every task and across all capabilities, with no way for other model companies to catch up. [Preview: I’m working on a story about China’s super-app DNA x AI applications.]

In that case, that company would build the best applications on top of its best model, which would remain proprietary. So far, however, the industry is moving in the opposite direction. For one, multiple frontier model companies are competing fiercely, and each is better at certain tasks. Meanwhile, DeepSeek has quickly caught up to frontier model capabilities and has even open-sourced its model.

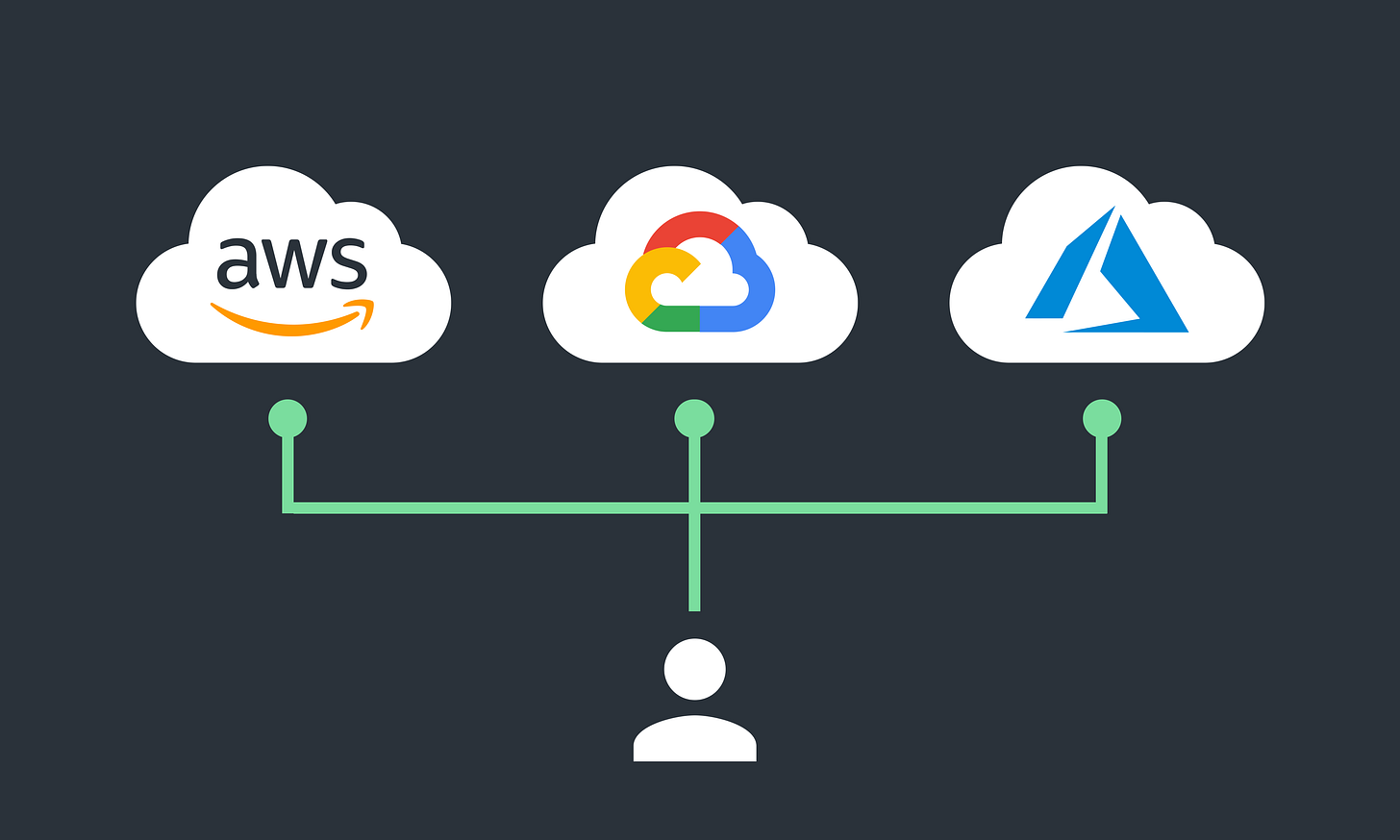

Model-Agnostic, just like Cloud-Agnostic

During the cloud era, hyperscalers like Amazon AWS, Microsoft Azure, and Google Cloud (GCP) came to dominate the cloud infrastructure layer, which requires massive fixed investment in land, energy, and servers. However, their moats in the infrastructure layer did not translate into dominance in the application layer.

For example, when you use Netflix, would you care whether they host your data on AWS or Azure? Probably not.

I, for one, had never checked which cloud provider Netflix uses before writing this article (spoiler alert: it is mainly AWS, so I guess you could call Netflix an “AWS wrapper”). What is more interesting is that although Amazon leads the infrastructure layer and Netflix has no position there at all, Netflix beats Amazon’s Prime Video hands down.

The same is true not only for consumer applications like Netflix but also for enterprise applications. Salesforce, for example, is the leader in enterprise customer relationship management (CRM) software. Do Salesforce users know or care which cloud their data is stored on? Probably not. Similarly, neither Microsoft nor Amazon built the leading CRM application, despite their huge lead in cloud infrastructure.

So, why did the cloud application companies win? The answer lies in vertical expertise and customer reach. Salesforce has led CRM on the strength of deep domain-specific knowledge (CRM for healthcare, CRM for finance, etc.) and seamless integration with customers’ existing workflows. Moreover, Salesforce was already a leading CRM provider before the hyperscale cloud era took off, which gave it vast customer reach and a distribution advantage.

I believe the same will play out in the AI era. First, as consumers and enterprises get used to the AI applications they like, they will become LLM-agnostic, just as they are cloud-agnostic in most cases today (caveat: obviously, not all clouds are created equal, and there are cases where customers will prefer one cloud provider over another, but you get the point). Second, existing application companies with domain knowledge, distribution, network effects, and high switching costs will likely remain winners in the AI era by leveraging multiple frontier models, just as Salesforce remained the CRM leader in the cloud era by leveraging the cloud service providers.

I am not the only one who holds this view. Andrew Chen, a partner at a16z, explains better than anyone that distribution and network effects are still key to competitive advantage at the application layer.

Future of frontier models?

One may ask: okay, maybe the frontier model companies won’t be the application winners, but becoming the AWS, Azure, or GCP of the AI era is not a bad outcome. Those three are massive businesses, after all! I’d say that was many people’s ambition before DeepSeek. It was widely assumed that building frontier models would require a large amount of capital, just like building cloud data center infrastructure, which would mean only a few large players could dominate the space. However, DeepSeek has shown that the same frontier model capability can be achieved at a much lower cost, and, more importantly, it open-sourced its models (it is as if someone invented a much cheaper server and gave it away for free!). Frontier model companies are really in a tough spot.

I spoke to a cloud expert and former Microsoft Azure engineer about whether comparing model-agnostic to cloud-agnostic made sense. He offered some wisdom that I believe can serve as a roadmap for frontier model companies.

In the early days, cloud providers had little vertical focus and concentrated on infrastructure-level services. “AWS, Azure, and to some extent GCP were more or less competing on price,” he said. Over time, however, AWS, Azure, and GCP developed advantages in certain verticals and use cases. Today, the reasons an enterprise chooses one cloud provider over another vary and can be complicated.

This is a valuable lesson for today’s frontier model companies. Differentiated model products can ultimately only come from differentiated expertise. So frontier model companies now seem to have three likely paths: 1) develop sector- or vertical-specific advantages, 2) leverage and capture existing distribution and network advantages, or 3) wait to be commoditized.

[For more on how U.S. and Chinese cloud infrastructure compare, see an article I wrote in collaboration with JS Tan.]

Additional thoughts

Please feel free to leave a comment or DM me to share your thoughts on this: for now, AI applications are still accessed in familiar ways, at the edge through web pages or mobile apps. But what if someone comes up with a completely different way of engaging with AI, not just a new interface but an entirely new form factor we’ve never seen? Think of Nokia: it had apps in the early “smartphone” era (I had my red-and-white brick phone and thought I was the coolest person on the block; anyone here with me? waawhaat?). Still, Apple reinvented what a smartphone means, and the iPhone became the default form factor for the mobile internet. That completely changed how mobile apps are designed, and the rest is history.